Deploy your first backend automation with Ryax

This tutorial introduces you to the essentials of Ryax usage through its web interface by creating a backend system for a video detection app. The goal of this workflow is to process a video and detect the objects displayed in it.

As an example, we will create a backend workflow called “Detect objects on videos”: it will allow users to upload their own videos, run object detection on them and publish the results to an external service.

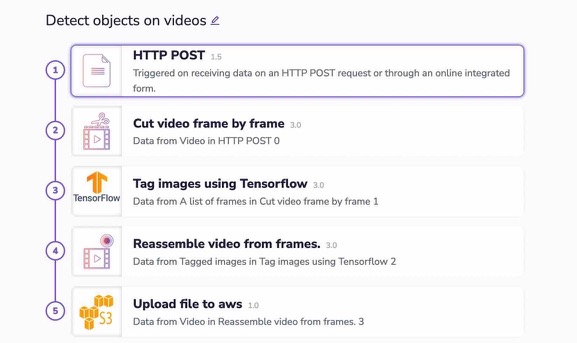

Here is a step-by-step description of this backend workflow:

- Open an upload form for users to input their video.

- Cut the video frame by frame to allow single-image processing by the object detection algorithm.

- Run video frames through a LiveNet object detection algorithm.

- Reassemble the frames into a result video.

- Upload the result video to an AWS S3 bucket (optional step).

For more information about this workflow, please refer to its complete documentation.
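To make the shape of this processing chain concrete, here is a minimal Python sketch of the steps listed above. It is not Ryax code: the function names are hypothetical, a “video” is modelled as a plain list of frame labels, and the detection step is a placeholder that only appends a marker to each frame.

```python
from typing import Callable, List

# Hypothetical stand-in: a "video" is just a list of frame labels here.
Frame = str
Step = Callable[[List[Frame]], List[Frame]]


def cut_video(video: List[Frame]) -> List[Frame]:
    """Split the video into individual frames."""
    return list(video)


def detect_objects(frames: List[Frame]) -> List[Frame]:
    """Tag each frame (placeholder for the real object detection model)."""
    return [f"{frame}+tags" for frame in frames]


def reassemble(frames: List[Frame]) -> List[Frame]:
    """Join the tagged frames back into a result video."""
    return list(frames)


def run_pipeline(video: List[Frame], steps: List[Step]) -> List[Frame]:
    """Feed the output of each step into the next one, in order."""
    data = video
    for step in steps:
        data = step(data)
    return data


result = run_pipeline(["frame0", "frame1"], [cut_video, detect_objects, reassemble])
print(result)
```

Each step only needs to know about the data it receives and the data it returns, which is the same idea the workflow editor expresses visually.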

1. Assembling our first backend automation

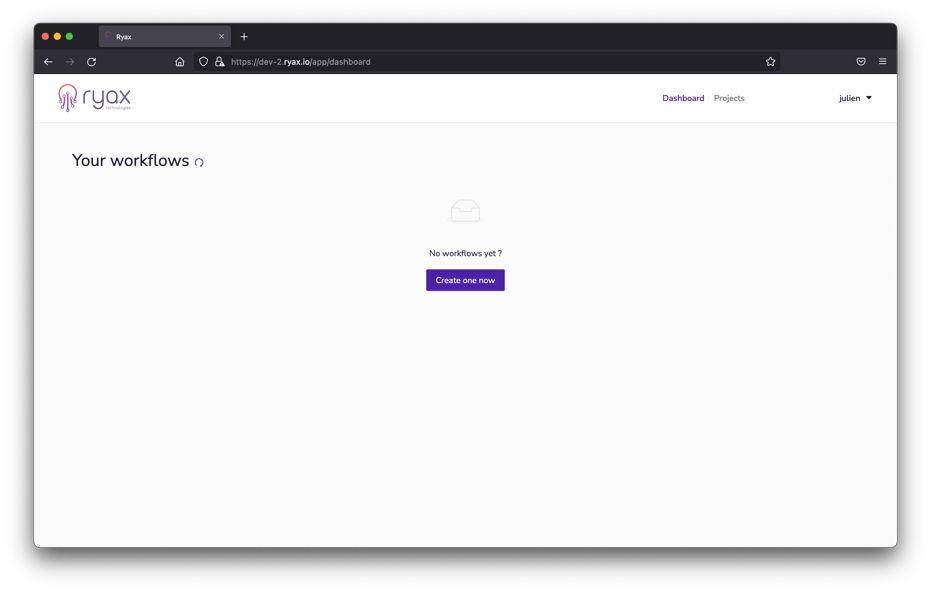

In this first part of the guide, we will assemble a complete backend workflow using only the default public actions already available in Ryax. To start with, let’s log into Ryax and create a new workflow from the Dashboard page by clicking on “Create one now”:

For example, we’ll name this new workflow “Detect objects on videos”.

Click “OK”, and you are now ready to start assembling your backend workflow!

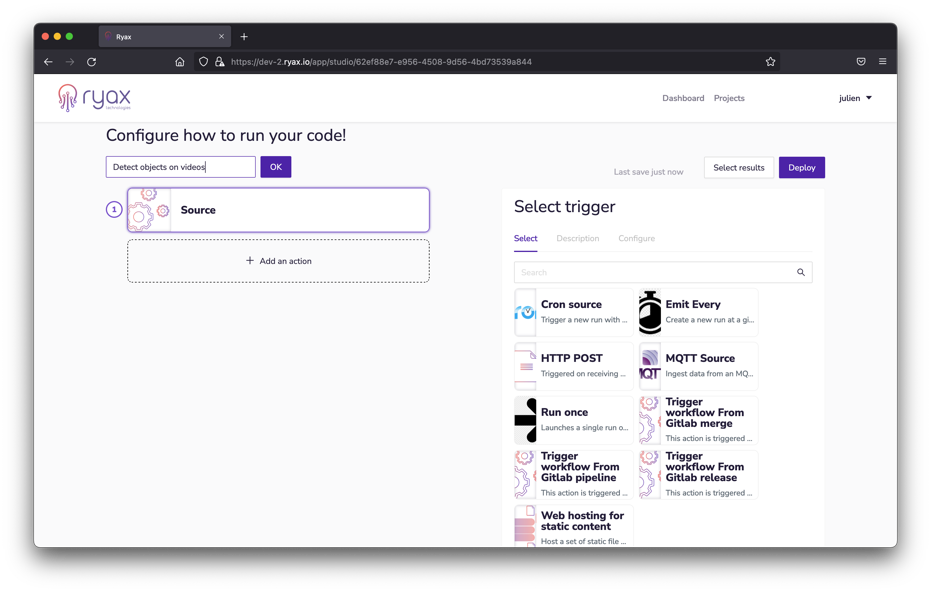

1.1 Defining the workflow’s trigger

The first ‘step’ of a Ryax workflow is called a ‘trigger’. Triggers are the heart of backend automations: they allow for controllable, precise and reliable actuations of a processing chain. Triggers actively listen for external events or user inputs such as API calls, movements on a server, messages or database updates, and start the workflow when specified.

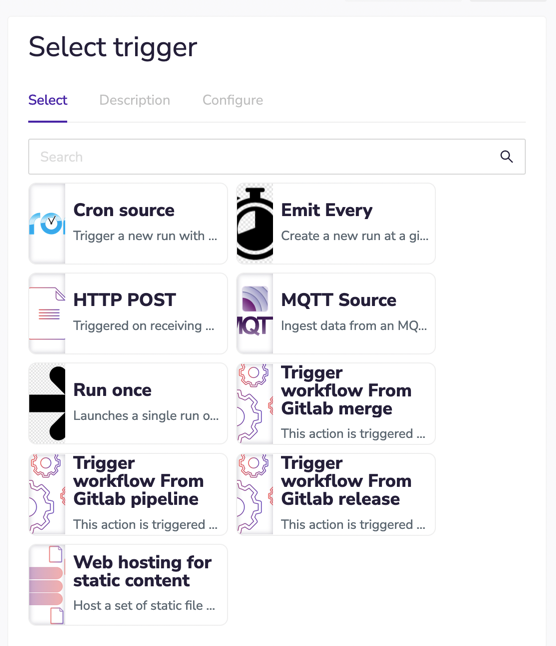

In Ryax, building a workflow starts with choosing a trigger, which you do from the panel on the right. There are lots of options to choose from 🙂

For more details on Ryax concepts, take a look here: Ryax concepts

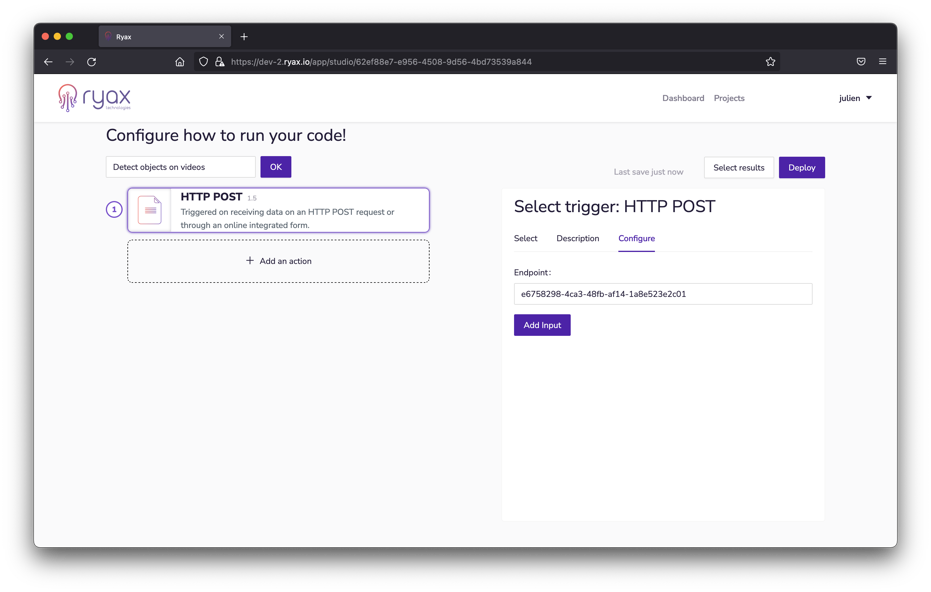

For our example, we’ll choose an “HTTP POST” trigger:

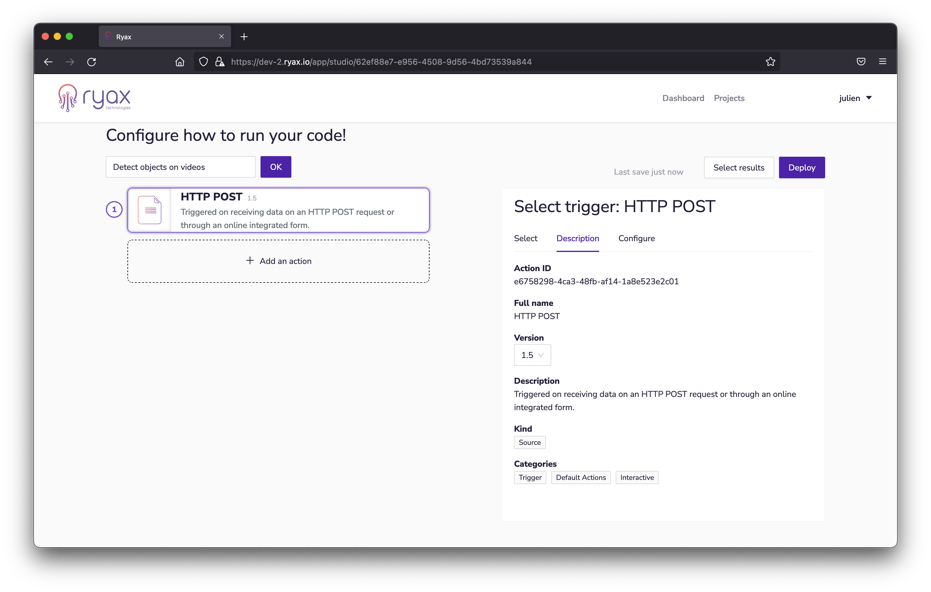

This trigger will generate and display a UI form that users can interact with. You can check out the “Description” tab to the right for a quick description of any action or trigger in Ryax.

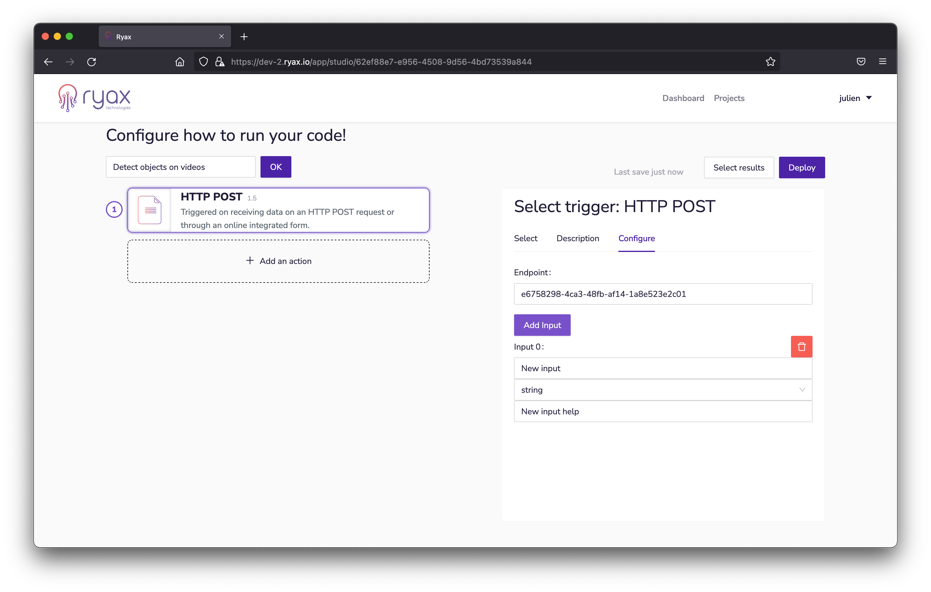

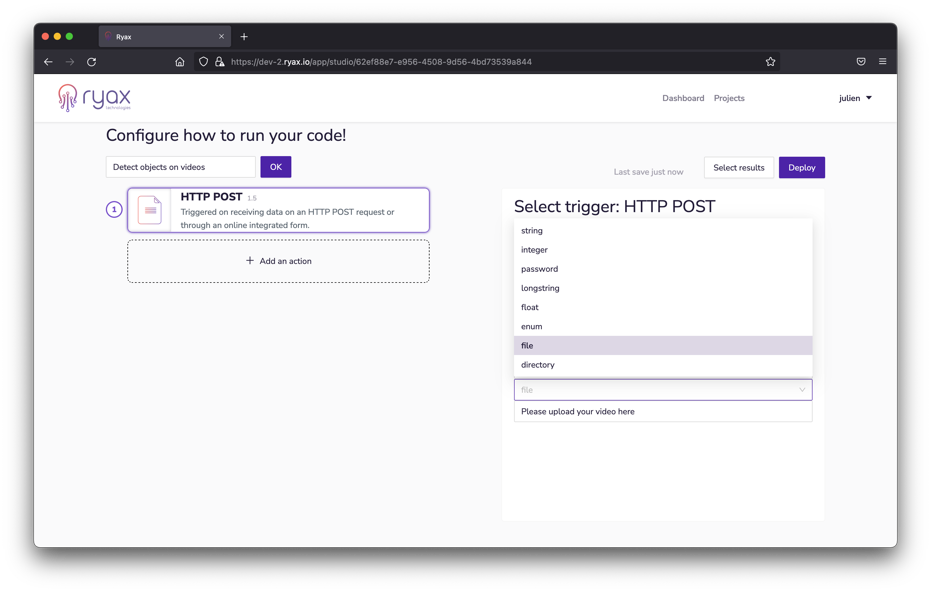

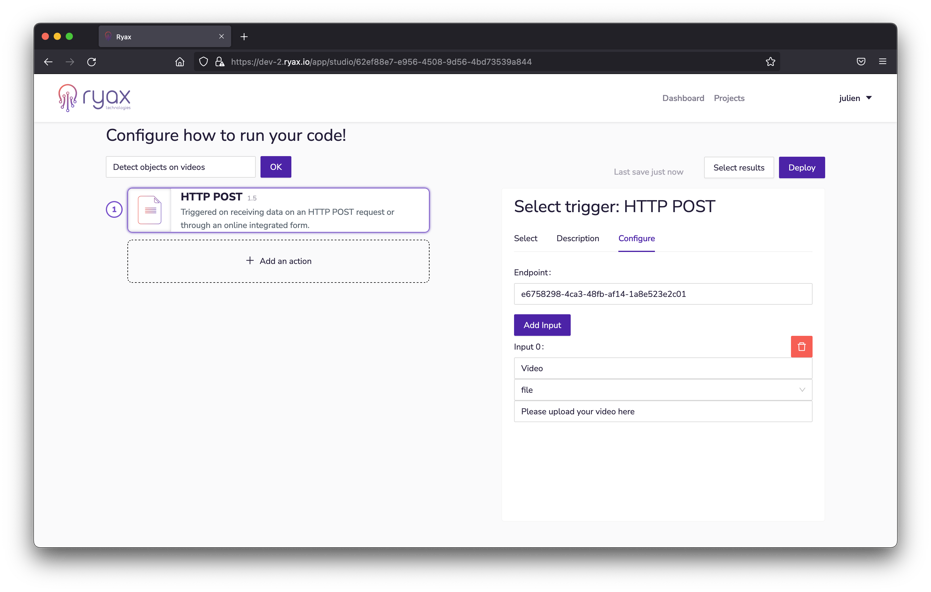

Now we’ll configure the form so it can take files as inputs. Let’s go back to the “Configure” tab and click the “Add Input” button:

Since we want users to be able to upload video files to our form, we’ll select “file” as the Input 0 type:

While we are at it, we also use the other fields to name and describe the expected user input: this will help users understand what the form actually does.
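As a rough mental model, the form input configured above boils down to a name, a type and a description. The sketch below captures that in Python; the `FormInput` class, the `ALLOWED_TYPES` set and the description text are illustrative assumptions, not Ryax’s actual data model or its full list of input types.

```python
from dataclasses import dataclass

# Assumed set of input types for illustration only; the tutorial itself
# only confirms that a "file" type exists.
ALLOWED_TYPES = {"string", "integer", "file"}


@dataclass(frozen=True)
class FormInput:
    """Hypothetical description of one input field of the upload form."""
    name: str
    type: str
    description: str = ""

    def __post_init__(self) -> None:
        # Reject unknown types early, much like a form editor would.
        if self.type not in ALLOWED_TYPES:
            raise ValueError(f"unsupported input type: {self.type!r}")


video_input = FormInput(
    name="video",
    type="file",
    description="The video to run object detection on",
)
print(video_input)
```

A clear name and description matter because they are the only guidance end users get when they open the form.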

1.2 Adding actions to our backend workflow

Next step is to add actions to the processing chain.

In Ryax, actions perform automated operations like sending messages, storing data, executing an ML algorithm, training a model, creating a branch in a Git repository, performing a search, etc. Actions can ingest data from any given point of a workflow, process it and pass it downstream for another action to take over. Chaining actions together is what truly creates end-to-end backend automations!

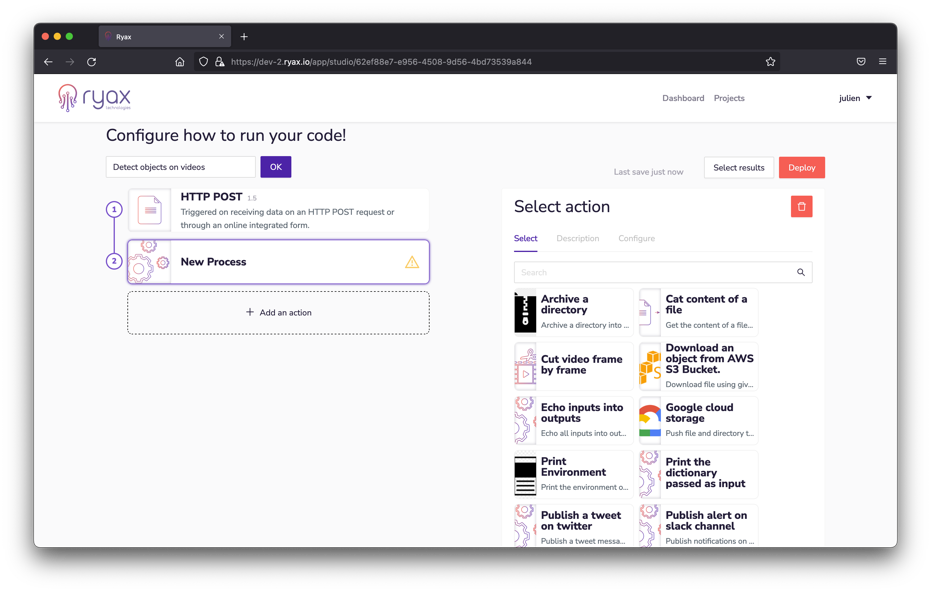

To add our first action, we’ll click on the “Add an action” button at the bottom of our workflow:

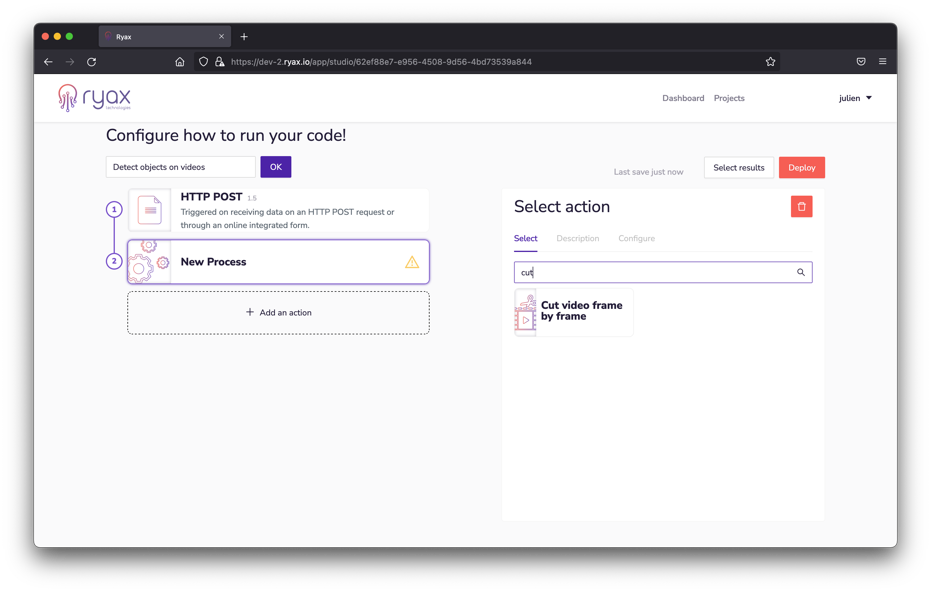

This will lead us into the action selection pane to the right. At this point we want to cut the video frame by frame, so we can search for “cut…” in the search box:

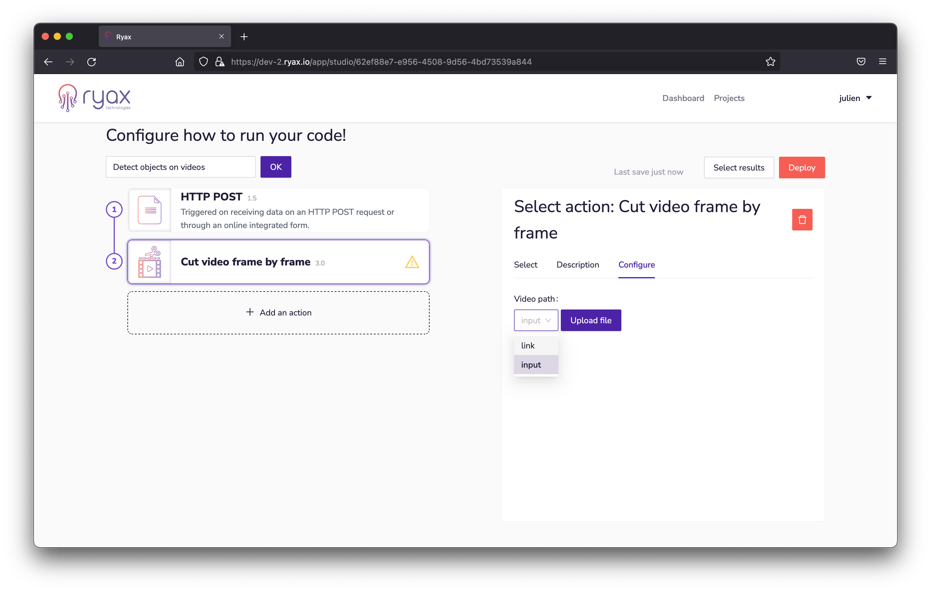

Let’s click the “Cut video frame by frame” action to add it to our workflow. We’re automatically shown the “Configure” tab, which allows us to set up the action. Now, we want this action to ingest the uploaded video and process it. The way to do this in Ryax is to use the “link” input type, which allows us to use any data point of our workflow as an input to an action. Let’s choose the “link” option for our video’s path:

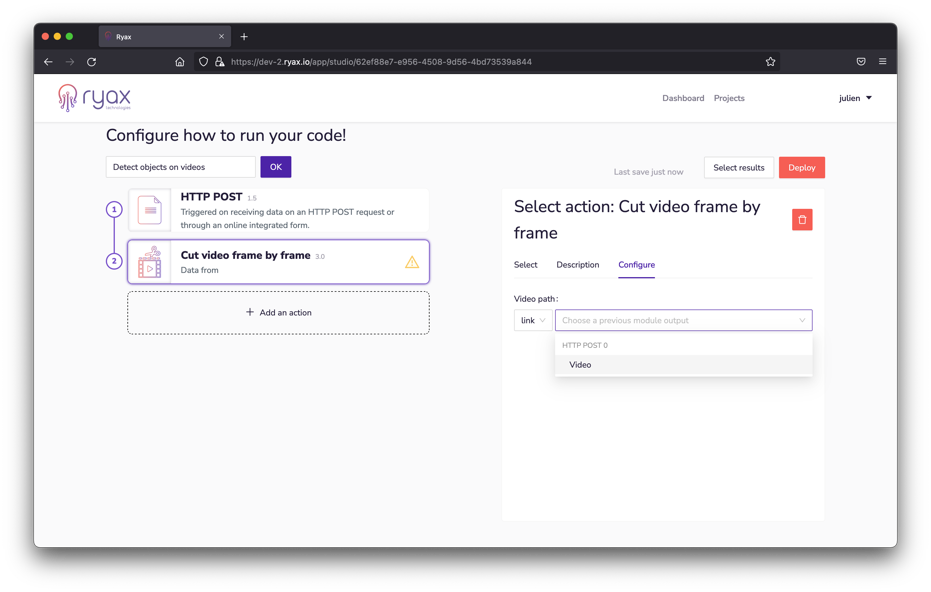

Now we are able to choose the previous action’s output: the video uploaded by our user. We can select the “video” output from the action “HTTP POST 0”:

Our “cut video” action is set up and working at this point 🙂
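Conceptually, a “link” input is a reference to a named output of an earlier step, resolved when the action runs. The sketch below illustrates that idea in plain Python; the `Link` class, the `resolve_inputs` function and the file path are hypothetical and do not reflect how Ryax implements links internally.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass(frozen=True)
class Link:
    """Hypothetical reference to an output of an upstream step."""
    step: str    # name of the upstream step, e.g. "HTTP POST 0"
    output: str  # name of that step's output, e.g. "video"


def resolve_inputs(inputs: Dict[str, Any],
                   produced: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Replace every Link with the value produced upstream; keep static values."""
    resolved = {}
    for name, value in inputs.items():
        if isinstance(value, Link):
            resolved[name] = produced[value.step][value.output]
        else:
            resolved[name] = value
    return resolved


# Outputs already produced by the trigger (the path is a made-up example).
produced = {"HTTP POST 0": {"video": "/tmp/upload.mp4"}}

# The "cut video" action takes its video from the trigger's "video" output.
cut_inputs = {"video": Link(step="HTTP POST 0", output="video")}

resolved = resolve_inputs(cut_inputs, produced)
print(resolved)
```

The same mechanism applies to every later action in this tutorial: each one links one of its inputs to an output produced further up the chain.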

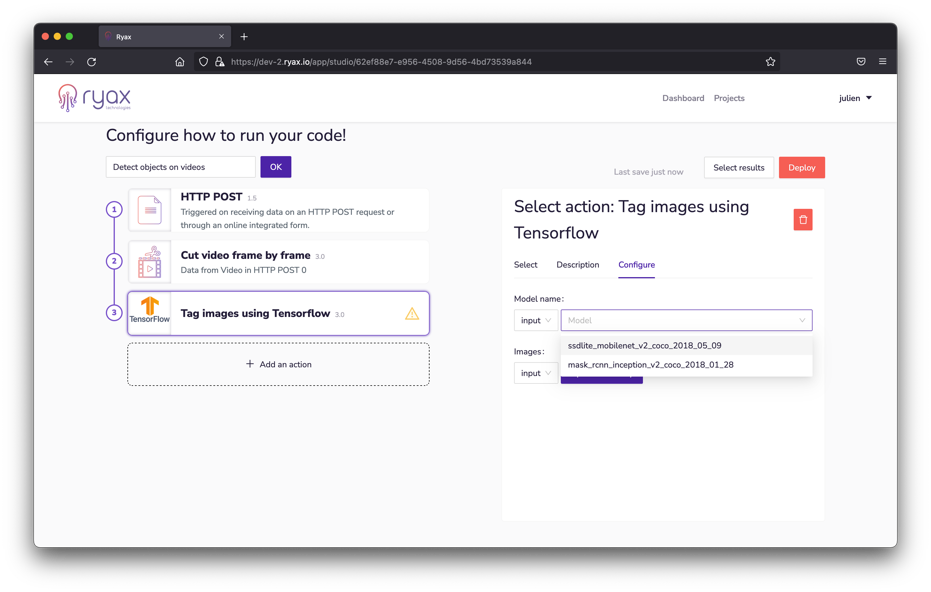

Let’s add another action below: we’ll choose the “Tag images using TensorFlow” algorithm.

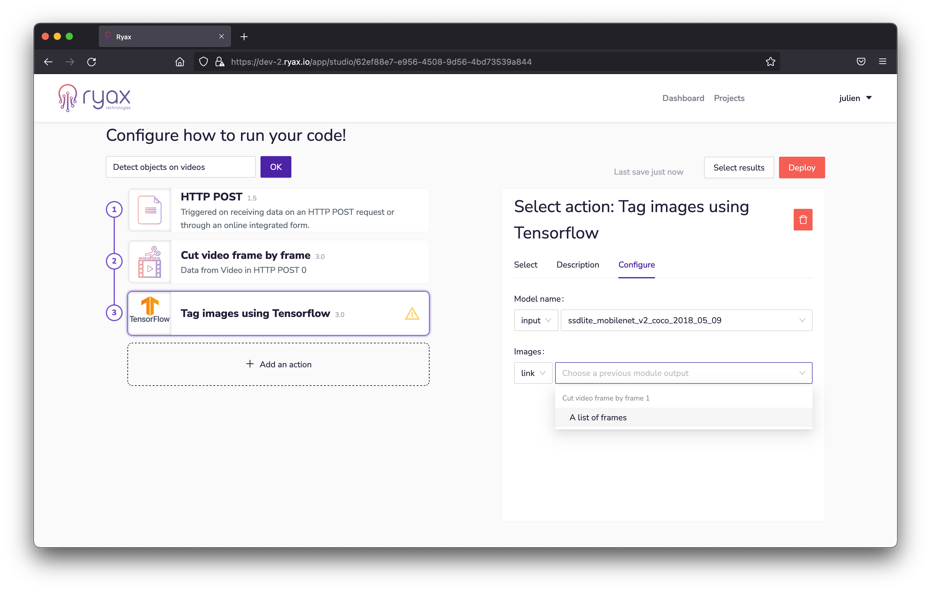

Two different models are available for this algorithm. We’ll choose the MobileNet version for now. In the same spirit as before, we’ll choose the ‘link’ input type for the ‘images’ input of this action:

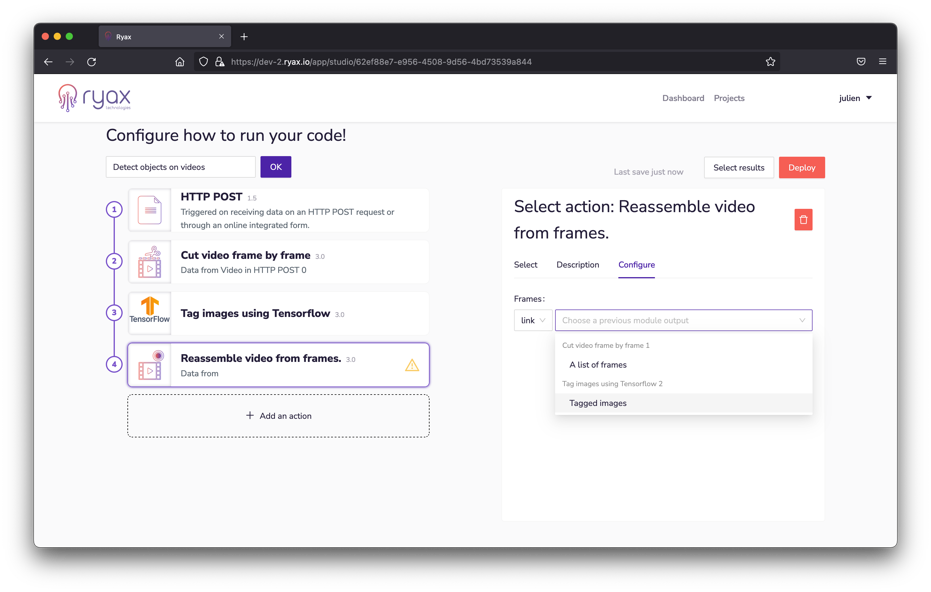

Now we can move on and add another action below: “Reassemble video from frames”. Same as earlier, we’ll use the ‘link’ input type to feed this step of our workflow. Let’s select the tagged images as an input:

1.3 Wrapping up our workflow (optional step)

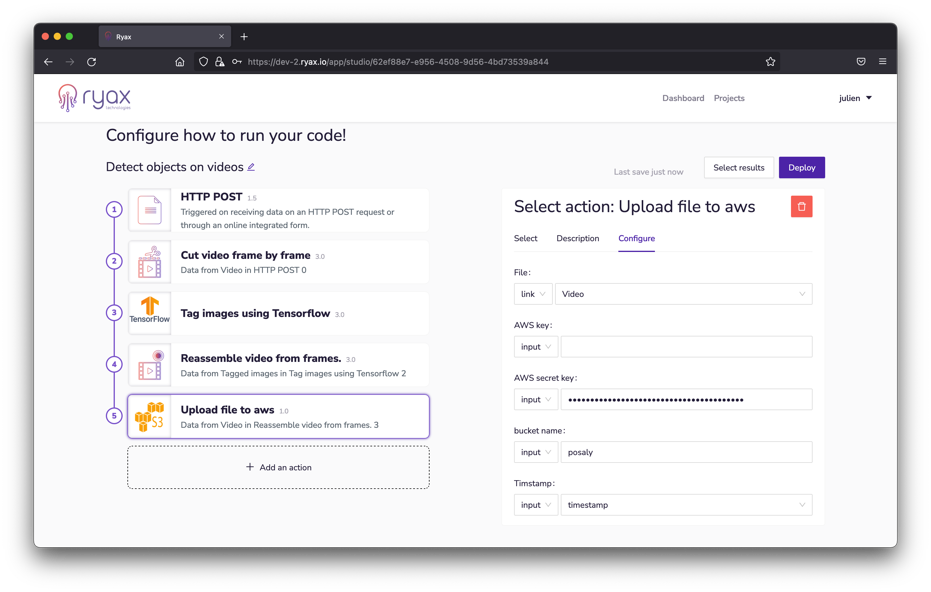

We’re almost there! We’ll add one last action to our backend workflow: “Upload file to AWS”. For this one we’ll use the ‘link’ input again to ingest our result video, and you can fill in the other inputs with the credentials for one of your AWS S3 buckets:

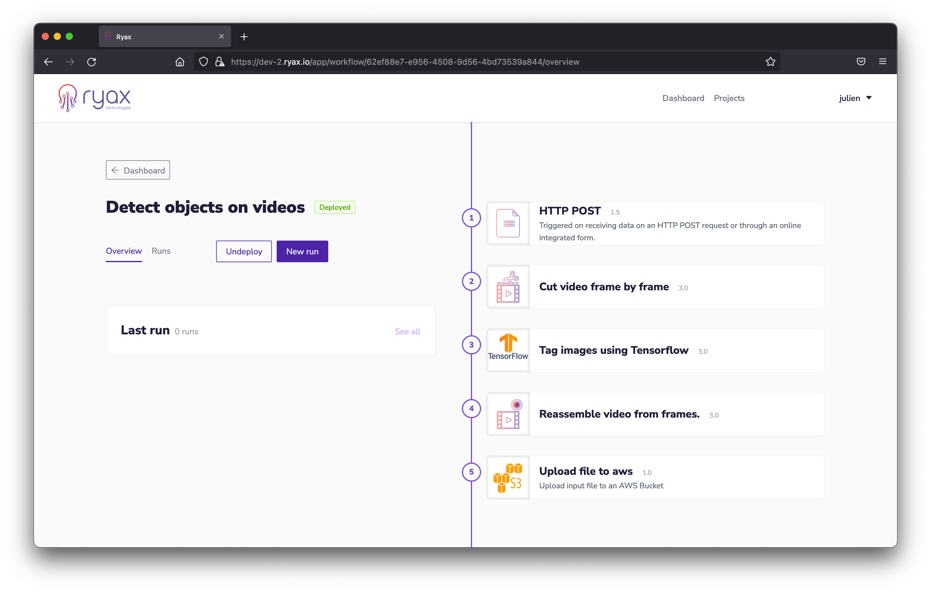

At this point we have a fully configured workflow ready to run!

2. Deploying and running our backend workflow

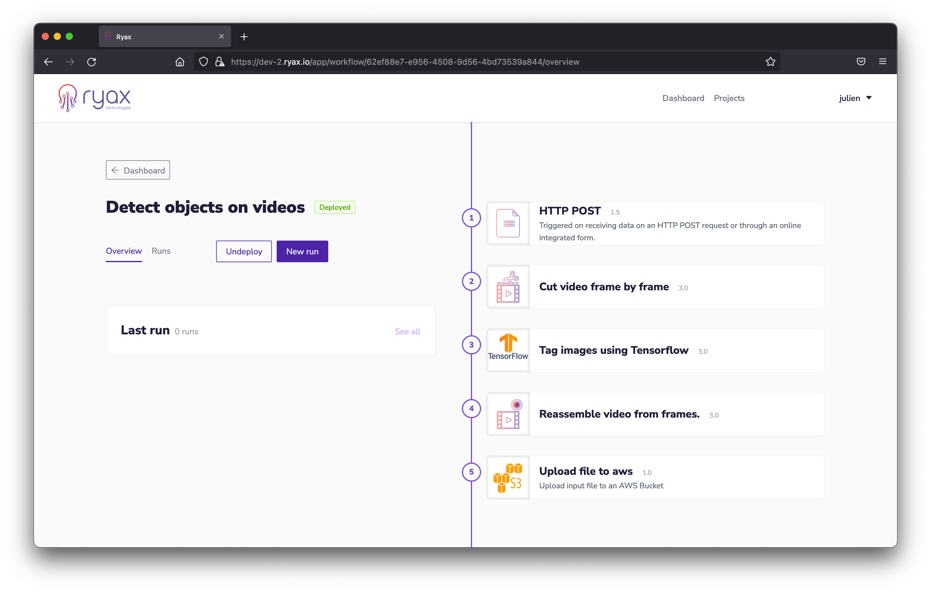

The workflow is displaying no errors and shows a “deploy” button at the top right part of the panel: you can now click the “deploy” button! It will lead you to the “run” management page:

At this stage, our workflow is in a “deployed” state. Once deployed, a workflow is ready to be triggered, receive inputs and propagate them through its steps. Workflow actuations are called “runs” in Ryax: every time a workflow is triggered, it generates a run.
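The relationship between deployment, triggering and runs can be summarized with a small Python sketch. The `Workflow` class below is a toy model written for this explanation, with hypothetical names and a made-up file name; it is not part of any Ryax API.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Any, Dict, Iterator, List


@dataclass
class Workflow:
    """Toy model: a workflow must be deployed before it can produce runs."""
    name: str
    deployed: bool = False
    runs: List[Dict[str, Any]] = field(default_factory=list)
    _ids: Iterator[int] = field(default_factory=lambda: count(1), repr=False)

    def deploy(self) -> None:
        self.deployed = True

    def trigger(self, payload: Any) -> Dict[str, Any]:
        """Each trigger event on a deployed workflow creates one new run."""
        if not self.deployed:
            raise RuntimeError("workflow must be deployed before it can be triggered")
        run = {"id": next(self._ids), "input": payload}
        self.runs.append(run)
        return run


workflow = Workflow("Detect objects on videos")
workflow.deploy()
first_run = workflow.trigger("sample.mp4")
second_run = workflow.trigger("sample.mp4")
print(len(workflow.runs))
```

Triggering the same workflow twice with the same input still yields two separate runs, each with its own identifier, which is what makes individual executions inspectable later on.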

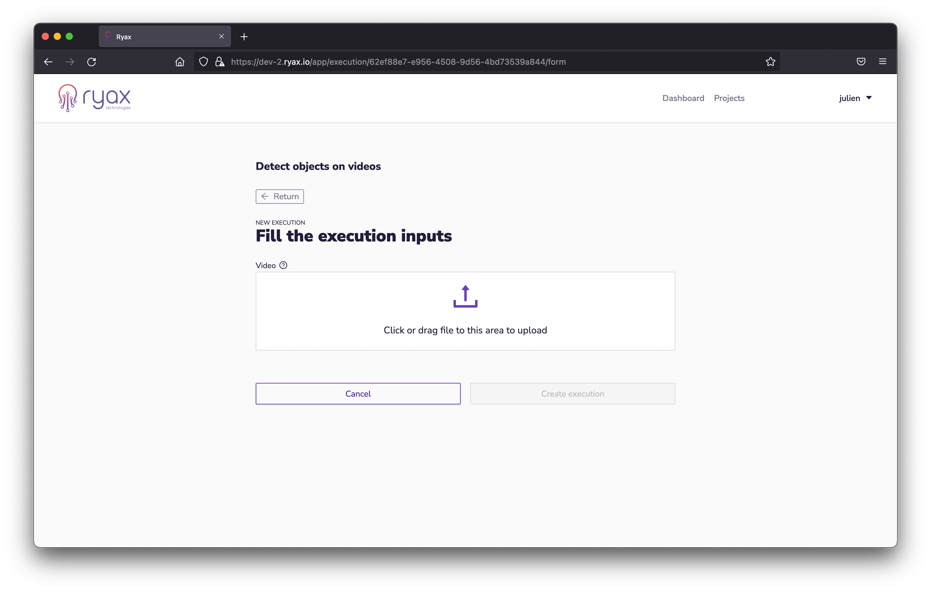

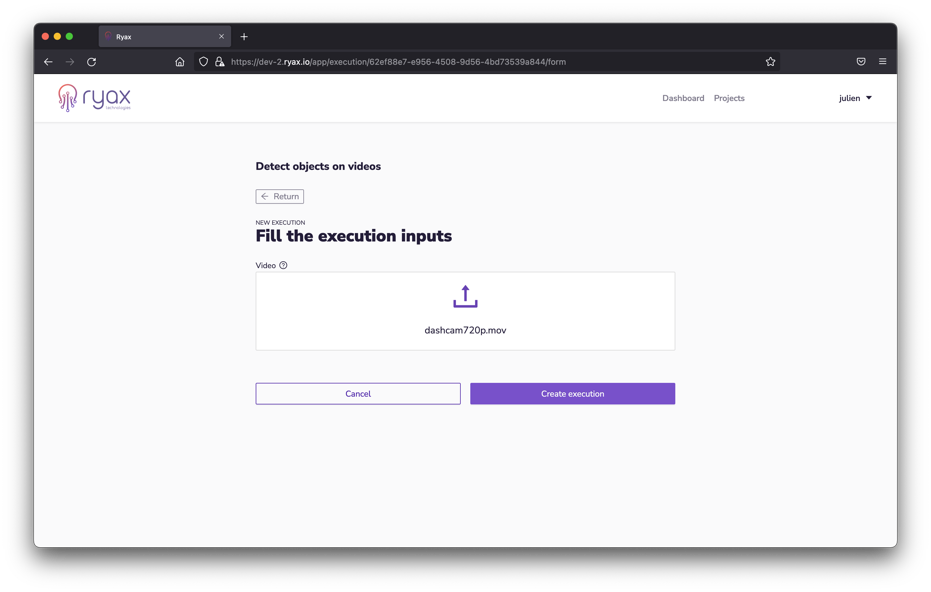

Click on “new run” to open the user form and generate our first workflow run. This is the page the user will see:

It is time to upload a video to trigger the backend workflow and test it. You can use the provided sample video below:

Once you have added the video to the form, you can click on “Create execution” to run the object detection on that video.

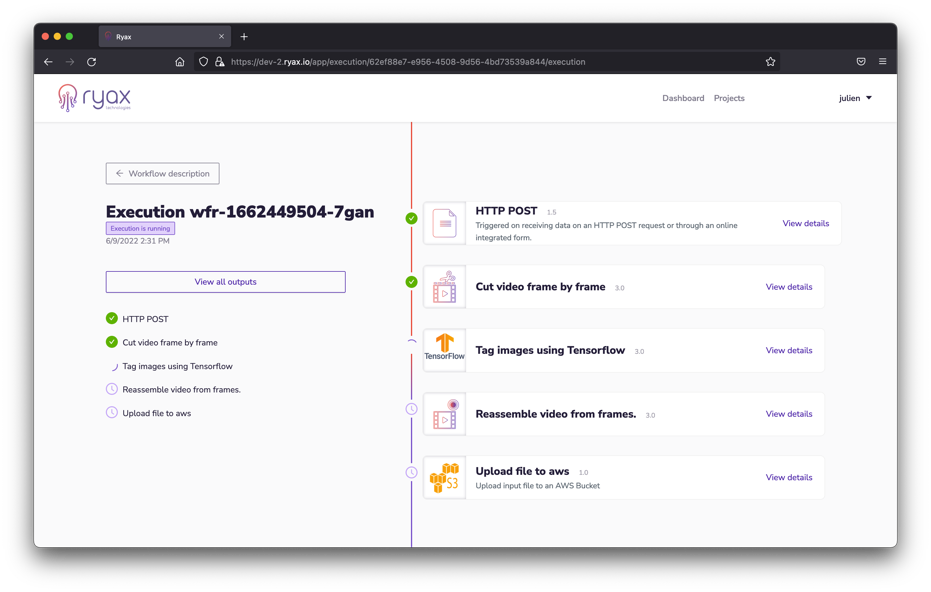

You’ll then be taken back to the execution page, where you can observe the workflow running and propagating through all the steps we defined:

3. Checking executions

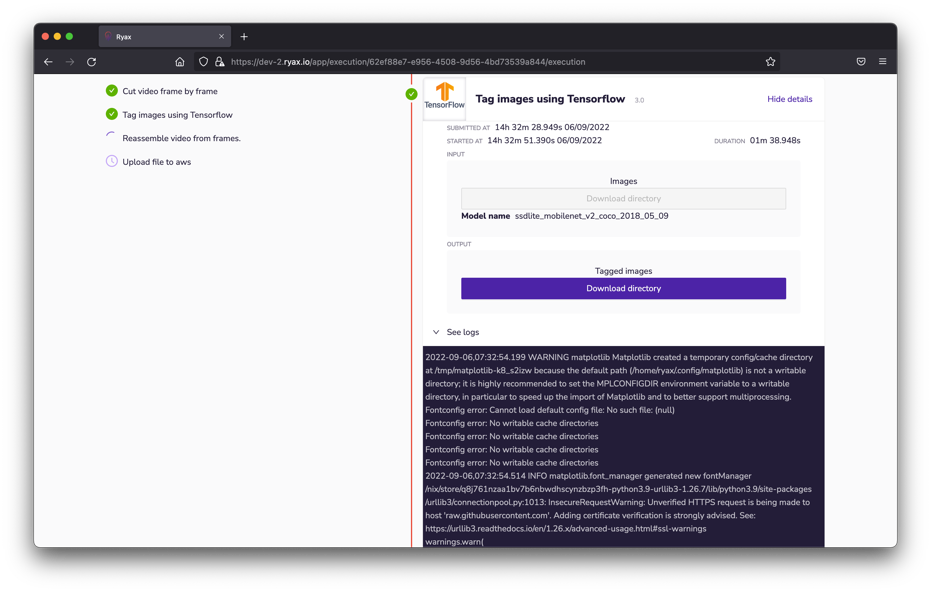

In the execution pages, each workflow step can be expanded by clicking on “View details”. Expanded views show you run timestamps, durations, inputs and outputs, as well as logs.

What’s really handy with these views is that you can download input and/or output data to check each and every step of your workflow. Being able to see the step-by-step changes applied to inputs is very valuable for understanding, debugging and improving any backend workflow. For example, you can download the entire folder of tagged frames, which is the output of the object detection algorithm.