Introduction

As AI and large language models move into production, GPU-accelerated Kubernetes clusters have become the foundation of modern AI platforms. To support model training, inference, and end-to-end workflows, organizations continue to invest heavily in GPU, CPU, and memory resources.

At the same time, a growing reality is becoming impossible to ignore: a significant portion of this expensive infrastructure is wasted. Industry analyses consistently show that GPU utilization in AI environments is far lower than expected, while CPU and memory in Kubernetes clusters are routinely over-reserved. Underutilized GPUs have emerged as one of the most costly and overlooked sources of cloud waste (Quali).

The financial impact is substantial. A single high-end cloud GPU often represents thousands of dollars per month, and multi-GPU nodes can quickly reach tens of thousands of dollars in monthly spend. According to a ClearML report, large portions of GPU capacity remain idle due to poor allocation and manual management practices, leaving organizations paying for compute they never use (ClearML via Digital Journal). Kubernetes further amplifies this problem: to avoid failures, teams commonly over-request GPU, CPU, and memory resources “just in case,” locking capacity that delivers no additional performance (The New Stack).

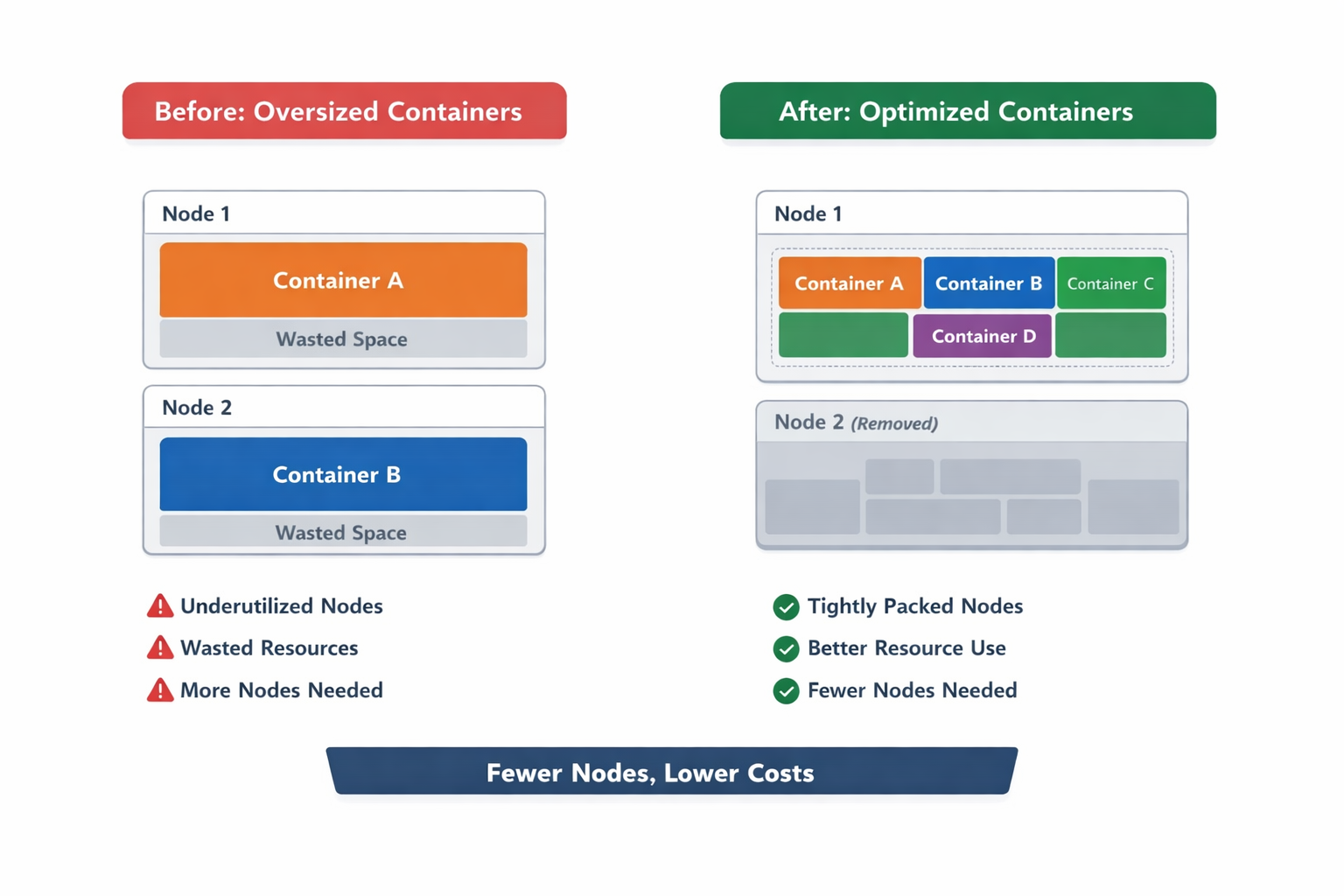

This is exactly where Ryax IntelliScale makes the difference. By intelligently reducing container sizes to what workloads actually need, IntelliScale improves resource utilization not only at the container level, but also at the node level. Right-sized containers pack together more efficiently, reducing fragmentation caused by conservative requests. The result is fewer partially utilized nodes, a lower overall node count, and meaningful infrastructure cost savings. Users simply define an initial container size and adjust a single slider to express their preference between maximum performance and maximum cost efficiency; Ryax handles the rest automatically.

1. From user intent to optimal execution: IntelliScale in action

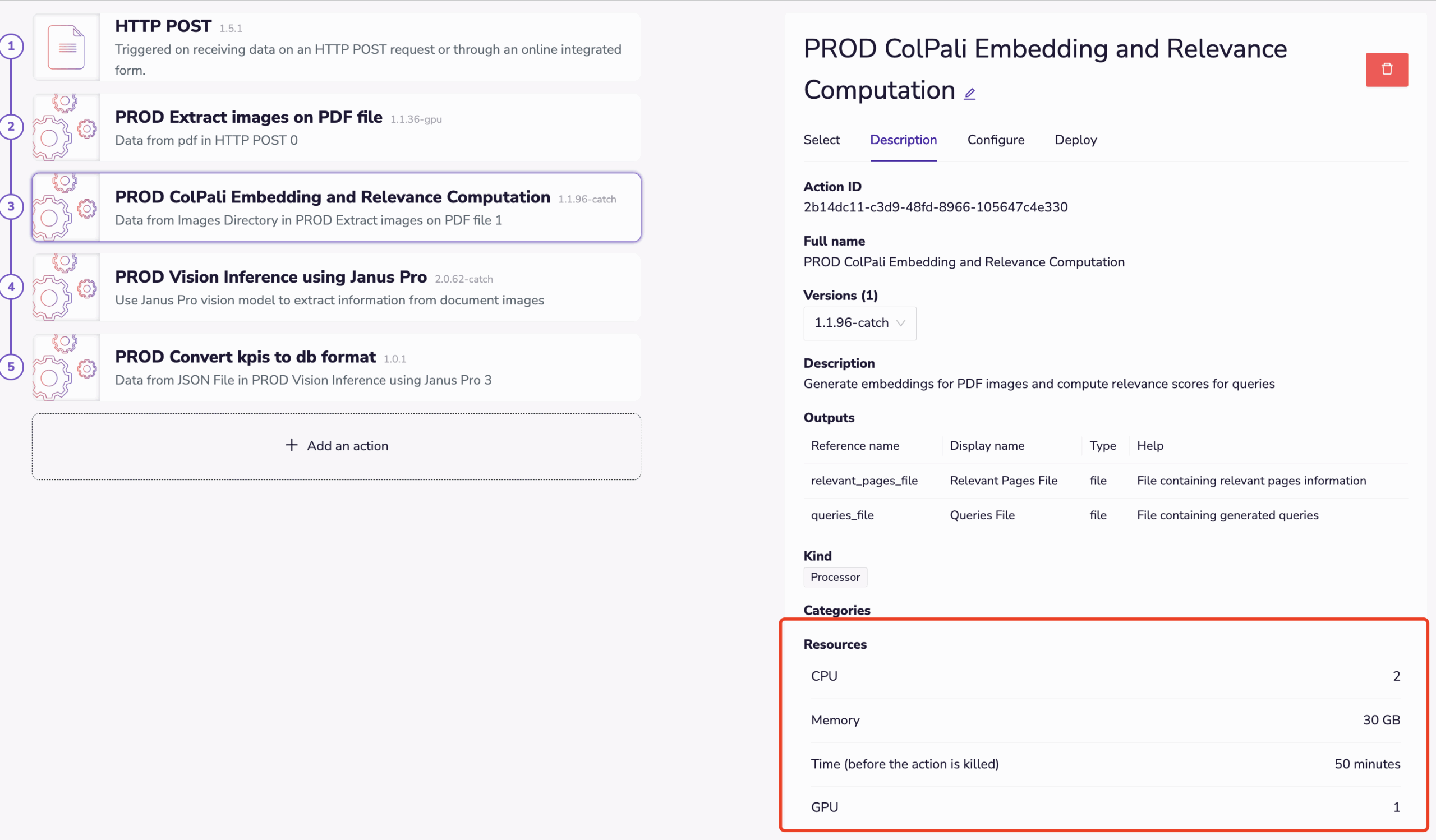

To illustrate how Ryax IntelliScale works from a user’s perspective, let’s look at a real-world AI workflow built on Ryax: extracting key performance indicators (KPIs) from annual report PDFs. This workflow consists of multiple actions, including document parsing, embedding generation, and vision inference. Here, we focus on the third action, where a ColPali model generates image embeddings: a compute-intensive step requiring GPU, CPU, and memory, executed on Kubernetes nodes equipped with NVIDIA GPUs.

When defining the resources for this ColPali embedding action, the user experience is intentionally simple.

First, the user defines the container’s CPU and memory using familiar Kubernetes-style settings. In practice, users often choose generously sized values to ensure stability and avoid runtime failures (and that’s perfectly fine). Even if the initial configuration is larger or smaller than what the workload truly needs, IntelliScale observes execution behavior and corrects in either direction over time. At this stage, the user also specifies that the action requires one GPU, without worrying about its exact size. Whether the workload should use a full GPU or a smaller NVIDIA MIG slice is left to Ryax to determine based on real execution data.

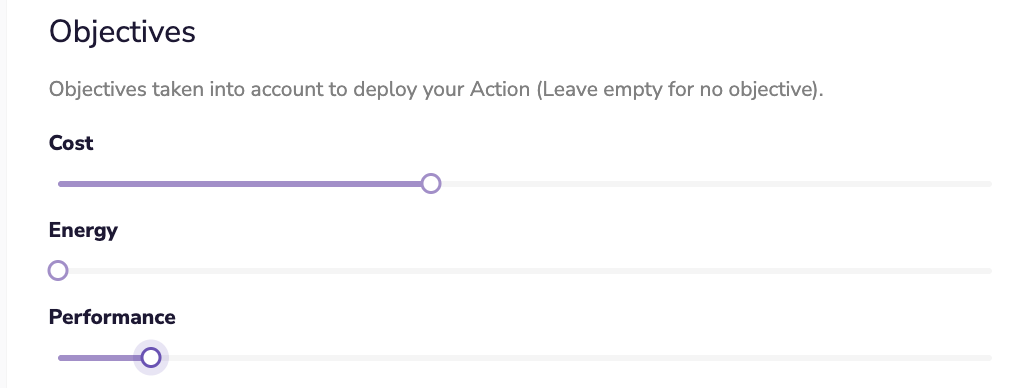

Second, on the action’s deployment page (see snapshot below), the user expresses intent by adjusting a simple cost-versus-performance slider. This single control captures a key business trade-off: completing the task faster by allocating more GPU resources, or minimizing GPU cost by accepting longer execution time. This preference directly guides IntelliScale’s decisions, including the selection of GPU MIG slice size. The same signal is also used by Ryax to decide where the action runs across available infrastructure, ensuring consistent performance and cost awareness throughout the platform.

That’s it. Pick values that feel right, move the slider to match your priorities, and hit run. There’s no need to be exact: IntelliScale learns and improves automatically.

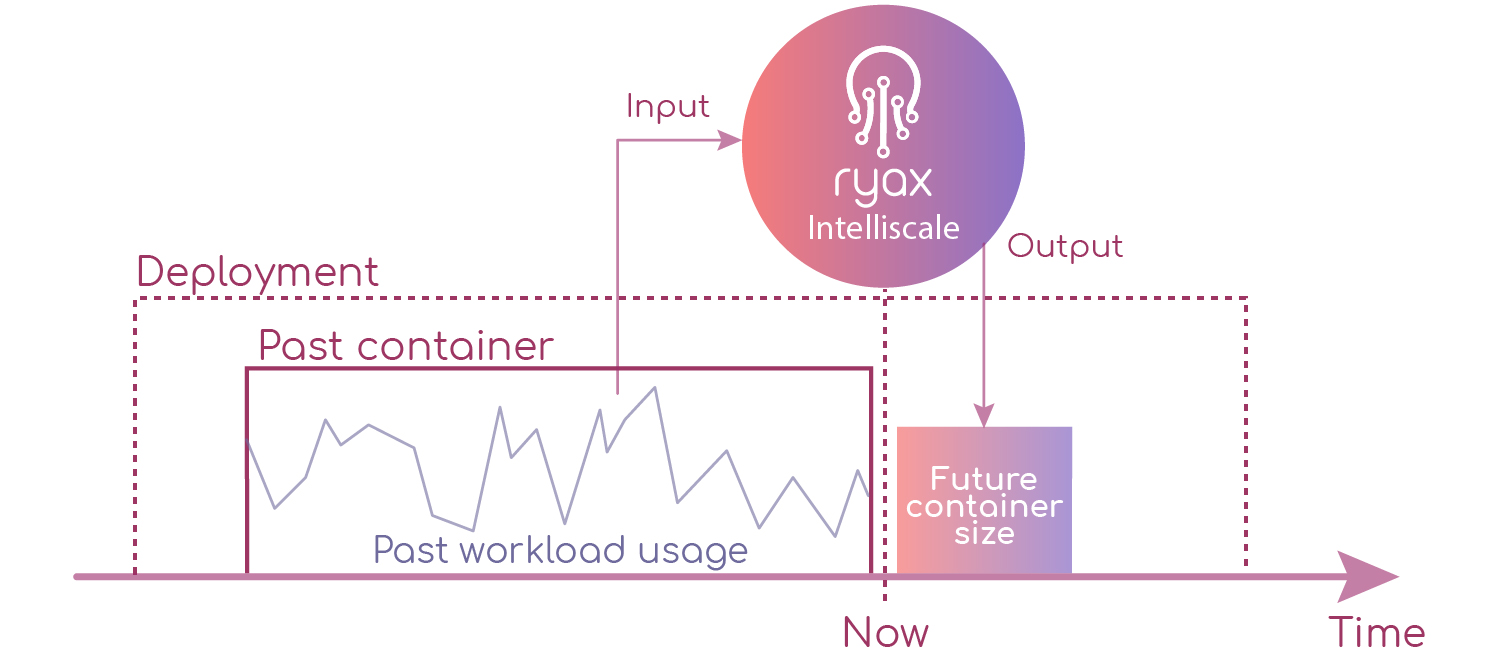

During the first execution, Ryax typically runs the action with the user-defined container settings and assigns a full GPU. Meanwhile, IntelliScale collects detailed runtime metrics across CPU, memory, and GPU usage. When the workflow runs again (even with different input data), IntelliScale can already generate a data-driven recommendation. The action then executes with a more accurate container size and a more appropriate GPU slice.

After a few executions, these recommendations naturally converge. Even if workflow behavior changes over time, no user intervention is required. IntelliScale automatically detects shifts in execution patterns, briefly explores updated configurations, and quickly re-converges to a new near-optimal allocation, while the workflow continues to run.

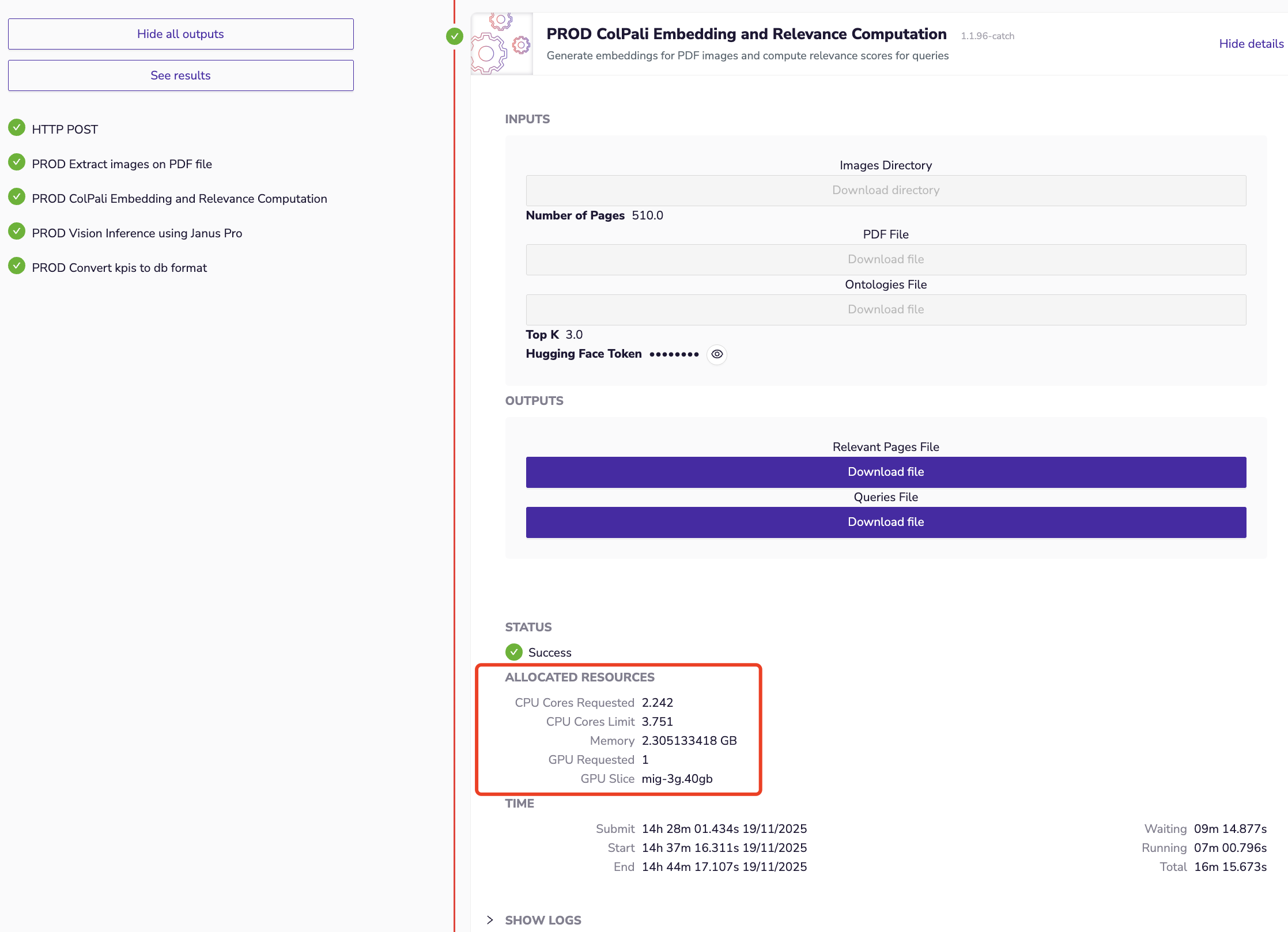

As the figure shows, after two convergence runs, IntelliScale recommends a CPU request of roughly 2.2 cores and a CPU limit of about 3.7 cores: slightly higher than the original 2 cores. Memory usage stabilizes just above 2 GB, far below the originally defined 30 GB. On the GPU side, IntelliScale selects a 3g.40gb MIG slice, representing roughly half of a full GPU. The remaining GPU capacity is immediately available for other actions, improving overall cluster utilization and reducing the total number of required nodes.

In short, Ryax IntelliScale relies on just a small number of intuitive user inputs, then continuously optimizes execution based on real workload behavior: automatically, transparently, and at scale.

2. Behind the scenes: How Ryax IntelliScale works intelligently

At its core, Ryax IntelliScale is a vertical autoscaling engine designed for Ryax workflows, not traditional clusters.

Unlike autoscalers that react at the node or pod level, IntelliScale operates at the workflow action level. For each action execution, it collects fine-grained runtime metrics across CPU, memory, and GPU usage, and leverages the full execution history to predict more accurate resource requirements for the next execution. These recommendations are forward-looking, execution-aware, and continuously refined over time.

This process happens action by action, transparently, without requiring users to change how they design or deploy workflows.

2.1 One goal: less guesswork, better efficiency

Different resources behave differently, and IntelliScale is intentionally built around this reality.

2.1.1 CPU and Memory: learning the right size

CPU and memory are capacity-bound resources: performance remains stable until limits are reached. For these resources, IntelliScale focuses on fitting, not throttling.

It uses a lightweight hierarchical prediction model that learns from past executions at multiple levels, including action-level behavior, workflow context, and historical patterns. Depending on configuration, this model can rely on fast, deterministic heuristic rules or a machine-learning-based variant that adapts more aggressively as data accumulates.

The objective is straightforward: converge container requests and limits toward real usage, eliminating unnecessary safety margins that quietly inflate cluster size.
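To make the idea of converging requests and limits toward real usage concrete, here is a minimal sketch of what a deterministic heuristic rule could look like. It is not Ryax's actual model: the function name `recommend_size`, the median-based request, and the 15% headroom are all assumptions chosen for illustration.

```python
import math


def recommend_size(peaks, request_quantile=0.5, headroom=1.15):
    """Return (request, limit) derived from per-execution peak usage.

    Hypothetical heuristic for illustration, not Ryax's actual model.
    """
    if not peaks:
        raise ValueError("need at least one observed execution")
    ordered = sorted(peaks)
    # Nearest-rank quantile: typical usage becomes the request.
    rank = max(1, math.ceil(request_quantile * len(ordered)))
    request = ordered[rank - 1]
    # The limit covers the largest observed peak plus a small headroom.
    limit = max(request, ordered[-1] * headroom)
    return request, limit


# Memory peaks (GB) from four past executions of one action (made-up values).
request, limit = recommend_size([2.0, 2.1, 1.9, 2.2])
print(f"request={request:.2f} GB, limit={limit:.2f} GB")
```

With these made-up peaks, the sketch proposes a request of 2.0 GB and a limit of about 2.53 GB, so the margin is sized from observed behavior rather than from a generous initial guess.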

2.1.2 GPUs: optimizing cost vs. performance

GPUs behave very differently. Once a GPU is active, it is effectively saturated, shifting the problem from capacity planning to a trade-off between execution speed and cost.

To address this, IntelliScale builds a performance profile of the workload using a roofline-inspired modeling approach, capturing how performance scales with available GPU resources. It then applies multi-objective optimization to balance execution time, GPU cost, and the user’s explicit performance–cost preference.

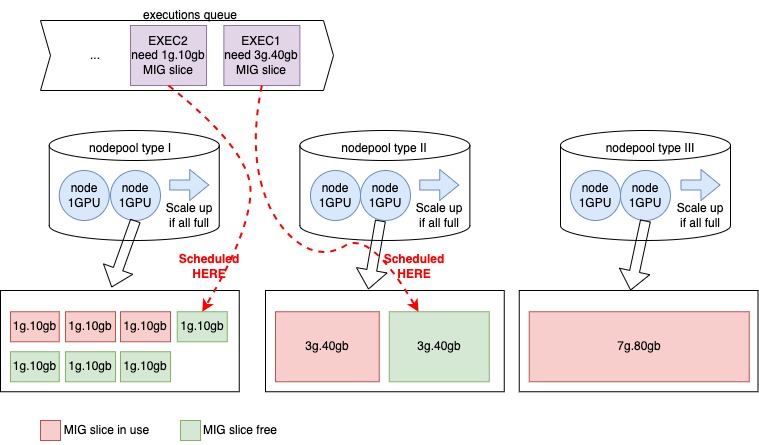

The outcome is a concrete, actionable decision: selecting the most appropriate NVIDIA MIG slice for the next execution, whether that means a full GPU or a fractional slice.
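The following toy sketch shows how a roofline-style runtime estimate and a single preference value might combine into a slice choice. Everything beyond the MIG profile names is an assumption: the compute and bandwidth fractions are rough illustrative values, cost is modeled as occupied compute fraction times runtime, and the weighted-sum scoring in the hypothetical `pick_slice` is a simple stand-in for whatever multi-objective method IntelliScale actually uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slice:
    name: str
    compute: float    # assumed fraction of full-GPU compute
    bandwidth: float  # assumed fraction of full-GPU memory bandwidth
    memory_gb: int


# Profile names as found on 80 GB MIG-capable GPUs; fractions are approximate.
SLICES = [
    Slice("1g.10gb", 1 / 7, 1 / 8, 10),
    Slice("2g.20gb", 2 / 7, 2 / 8, 20),
    Slice("3g.40gb", 3 / 7, 4 / 8, 40),
    Slice("4g.40gb", 4 / 7, 4 / 8, 40),
    Slice("7g.80gb", 1.0, 1.0, 80),
]


def roofline_time(s, compute_s, memory_s):
    """Runtime is bounded by the slower of compute and memory traffic."""
    return max(compute_s / s.compute, memory_s / s.bandwidth)


def pick_slice(compute_s, memory_s, needed_gb, preference):
    """preference: 0.0 favors low cost, 1.0 favors short runtime."""
    feasible = [s for s in SLICES if s.memory_gb >= needed_gb]
    if not feasible:
        raise ValueError("workload does not fit on any slice")
    times = {s.name: roofline_time(s, compute_s, memory_s) for s in feasible}
    # Assumed cost model: GPU share occupied multiplied by runtime.
    costs = {s.name: s.compute * times[s.name] for s in feasible}
    t_max, c_max = max(times.values()), max(costs.values())

    def score(s):
        return (preference * times[s.name] / t_max
                + (1 - preference) * costs[s.name] / c_max)

    return min(feasible, key=score).name


# A memory-bound workload: 10 s of compute and 30 s of memory traffic
# on a full GPU, needing 12 GB of GPU memory (made-up profile).
for pref in (0.0, 0.3, 1.0):
    print(pref, pick_slice(10, 30, 12, pref))
```

For this made-up profile, cost-leaning preferences land on `3g.40gb` while a pure performance preference selects the full `7g.80gb` GPU, which mirrors the kind of trade-off the slider expresses.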

2.2 Designed to handle the unexpected

Even with accurate models, the future is never fully predictable. Inputs change. Models evolve. Users may also start with conservative (or overly small) resource configurations.

IntelliScale is built to handle these situations safely. When an execution exceeds its resource limits and triggers an out-of-memory event (in host or GPU memory), IntelliScale responds immediately by temporarily increasing limits just enough to allow the execution to complete. These events are treated as high-value learning signals.

The model then adapts and rapidly re-converges toward a stable configuration. Crucially, users never need to intervene, even when workload behavior changes significantly.
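The recovery behavior described above can be pictured as a bump-and-retry loop. The sketch below is only an illustration under stated assumptions: the `OutOfMemory` exception, the `run_with_oom_recovery` helper, the 1.5x growth factor, and the `fake_action` stand-in are all hypothetical and do not reflect Ryax's internal interfaces.

```python
class OutOfMemory(Exception):
    """Raised by the (hypothetical) runner when usage exceeds the limit."""


def run_with_oom_recovery(run, limit_gb, growth=1.5, max_attempts=5):
    """Retry `run(limit_gb)` with a larger limit after each OOM.

    Returns (result, final_limit_gb, failed_limits). The failed limits
    are kept because each one is a useful signal for later predictions.
    """
    failed_limits = []
    for _ in range(max_attempts):
        try:
            return run(limit_gb), limit_gb, failed_limits
        except OutOfMemory:
            failed_limits.append(limit_gb)
            limit_gb *= growth
    raise RuntimeError(f"still out of memory after {max_attempts} attempts")


def fake_action(limit_gb, needed_gb=5.0):
    """Stand-in for a workflow action that needs 5 GB to finish."""
    if limit_gb < needed_gb:
        raise OutOfMemory
    return "done"


result, final_limit, failed = run_with_oom_recovery(fake_action, limit_gb=2.0)
print(result, final_limit, failed)
```

Starting from an undersized 2 GB limit, the loop fails at 2.0, 3.0, and 4.5 GB before completing at 6.75 GB. Only the failed action is retried, which matches the per-action isolation the platform relies on.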

2.3 A platform that amplifies IntelliScale

Ryax IntelliScale delivers its full value because it is deeply integrated into the Ryax platform. Ryax provides:

- Real-time, high-fidelity performance metrics collected via Kubernetes-native components (kubelet, cAdvisor, etc.)

- Persistent execution histories used directly as training data

- Isolated, independently scheduled workflow actions, each running in its own container

This architecture enables checkpointing of intermediate results and seamless retries of failed actions without restarting entire workflows. As a result, recovery from OOM events is fast, and IntelliScale can immediately apply updated recommendations.

2.4 From optimization to real cost savings

Optimization only matters if it translates into fewer machines and lower cloud bills.

Ryax closes this loop by combining IntelliScale with advanced scheduling and node pool management. GPU nodes are grouped into node pools based on MIG configuration, and workloads are scheduled onto the pool that best matches their predicted GPU slice size.

Each node pool can scale independently using standard cloud autoscalers. This turns IntelliScale’s per-action recommendations into real bin-packing advantages: reducing fragmentation, lowering node counts, and delivering tangible cost savings.
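A small packing experiment illustrates why right-sized requests translate into fewer nodes. This is a toy first-fit-decreasing packer, not Ryax's scheduler; the function name `nodes_needed`, the 16-core / 64 GB node shape, and the container counts are assumptions, with request sizes loosely echoing the earlier example.

```python
def nodes_needed(containers, node_cpu, node_mem):
    """First-fit decreasing over (cpu, mem_gb) requests; returns node count."""
    nodes = []  # each entry is [free_cpu, free_mem]
    # Place the largest containers first (by memory, then CPU).
    for cpu, mem in sorted(containers, key=lambda c: (c[1], c[0]), reverse=True):
        if cpu > node_cpu or mem > node_mem:
            raise ValueError("container larger than a whole node")
        for node in nodes:
            if node[0] >= cpu and node[1] >= mem:
                node[0] -= cpu
                node[1] -= mem
                break
        else:
            # No existing node has room: open a new one.
            nodes.append([node_cpu - cpu, node_mem - mem])
    return len(nodes)


# Eight identical actions on 16-core / 64 GB nodes (assumed shapes).
over_requested = [(2, 30)] * 8    # 2 cores, 30 GB each
right_sized = [(2.2, 2.5)] * 8    # 2.2 cores, 2.5 GB each

print(nodes_needed(over_requested, 16, 64))
print(nodes_needed(right_sized, 16, 64))
```

In this toy setup the over-requested containers need four nodes because memory reservations block packing, while the right-sized ones fit on two. Real savings depend on the workload mix and should not be read from this example.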

In internal evaluations using a Google Cloud Trace-based simulator, IntelliScale achieved:

- 33% reduction in node count for CPU- and memory-bound workloads

- 31.2% reduction for GPU-intensive workloads

All without increasing operational complexity for users.

Conclusion

Ryax IntelliScale redefines how resource optimization should work for modern AI workflows.

Instead of pushing complexity onto users, it transforms a handful of intuitive inputs into continuous, data-driven optimization. Instead of static sizing or manual tuning, it adapts automatically as workloads evolve. And instead of theoretical efficiency gains, it delivers measurable infrastructure savings, especially for GPU-intensive pipelines.

The result is simple: users focus on workflows and outcomes, while Ryax handles resources intelligently, automatically, and at scale.