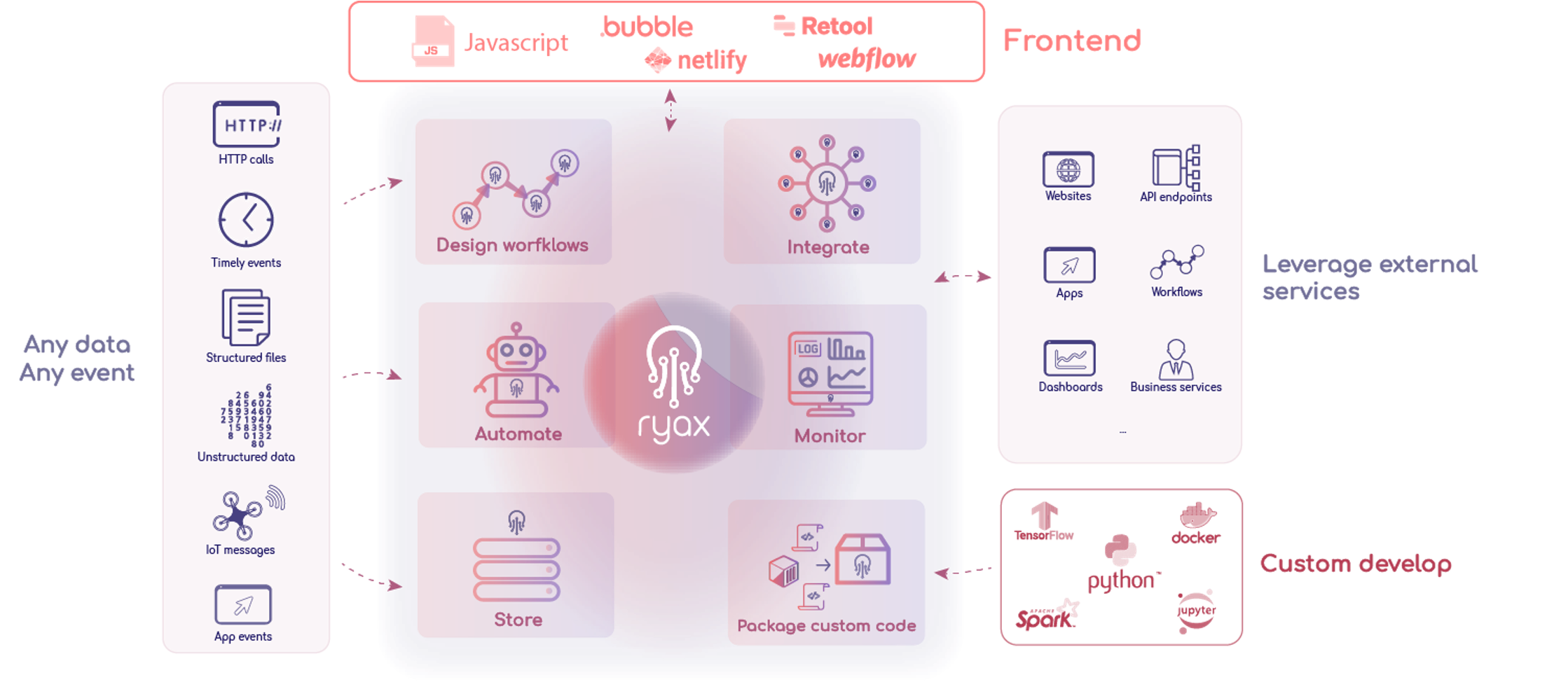

Deep dive into Ryax’s serverless technology

Running backends efficiently means being able to scale operations up and down with demand, which keeps infrastructure costs in check and avoids wasting resources.

In the RYAX platform, we use serverless principles to achieve that, without our users having to do the heavy lifting themselves.

On top of this, we use a "hybrid serverless" approach: we bridge the serverless and container worlds so that all kinds of backend processes can run, from the very light functions commonly preferred in serverless computing to the heavier models needed for Machine Learning.

'Standard' serverless implementations usually involve using custom runtimes (like AWS Lambda or AWS Fargate) and waiting for predefined events to trigger and run very lightweight functions. In this paradigm, users are also responsible for packaging the software themselves and for deploying the whole topology of services needed by the application (API gateways, object storage, databases, etc.).

This way of proceeding raises three important issues:

- Users remain heavily involved in the process, and need a strong skill set plus experience in serverless technologies.

- Being limited to very light functions is also a problem for computations that lie outside the realm of 'standard serverless usage' (e.g. heavier computations).

- Users will often have to use solutions specific to a given cloud provider, thus limiting their freedom to quickly adapt to environment constraints, specific customer needs, etc.

1. A low-code approach to serverless

As you may already know, at Ryax we chose the low-code route for our users, so we abstracted away the whole deployment part. Ryax isolates and packages the user's code, builds it with its CI/CD, then orchestrates and deploys it efficiently in production. For Python code, the whole packaging process is driven by a single requirements.txt file.

Advanced users can also use the Nix package manager for more complex operations.

2. Leveraging "hybrid" serverless for broader applications (including AI, ML...)

We also deemed it very important to fully support any kind of triggering event and any kind of workflow (including AI and Machine Learning) on any kind of infrastructure (Ryax relies on the Kubernetes orchestrator but is agnostic to the underlying cloud or on-premises flavour).

This is of course tricky, since serverless principles are often associated with very lightweight functions and processes. How do we run more complex and heavier tasks? In the Machine Learning world, for example, data scientists tend to use large containers embedding models and the necessary data. This approach is hard to align with serverless requirements and may even seem at odds with them.

So here's the solution we've come up with to seamlessly execute a broader range of services - light and heavy - on serverless-like backend systems, which we like to informally call "hybrid serverless":

- Before the function is called for the first time, the service is not instantiated; it only starts upon the first request. This preserves resources while the service is not yet needed.

- Upon the first request, the platform will start deploying a container embedding the function. As the container's deployment process may take some time on top of the actual function run time, this service's very first execution will be slower than the future runs, so that's something to bear in mind for this kind of approach.

(BTW, we're working on reducing cold start delays: on one side by finding the optimal moment to deploy a container given the order of actions in the workflow, and on the other side by improving Kubernetes' internal scheduling to take container layer locality into account, work developed in the context of the PHYSICS EU project.)

- When the first request ends, the service's container remains in a "pre-loaded" state where it is not actively running but can receive subsequent calls without having to be rebuilt and redeployed. Hence, subsequent calls run very fast, as the container is ready to be used.

- There is no maximum execution time per function: each function can run for as long as needed.

- By default, after a period of inactivity (configurable by the end user), the container is automatically undeployed to minimize resource usage and costs. If particular services need to stay deployed at all times to meet an application requirement, this can be set through specific configuration.

This system allows for very short response times, similar to pure serverless approaches, while still making it possible to run the much heavier applications common in data science fields, such as Machine Learning algorithms.
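The lifecycle described in the bullets above can be pictured with a small simulation. This is only a toy model and not Ryax code: the class name `HybridService`, the 5-second deployment cost, and the timeout values are all assumptions made for illustration, and time is passed in explicitly so the example stays deterministic.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class HybridService:
    """Toy model of one service's container lifecycle (hypothetical, not Ryax code)."""

    handler: Callable[[dict], dict]
    deploy_seconds: float = 5.0            # assumed cost of a cold start
    idle_timeout: Optional[float] = 300.0  # None models an "always deployed" service
    deployed: bool = False                 # nothing runs before the first request
    last_used: float = 0.0
    cold_starts: int = 0

    def reap_if_idle(self, now: float) -> None:
        """Undeploy the container once it has been idle for the configured timeout."""
        if (
            self.deployed
            and self.idle_timeout is not None
            and now - self.last_used >= self.idle_timeout
        ):
            self.deployed = False

    def call(self, request: dict, now: float) -> Tuple[dict, float]:
        """Run the handler and return (result, deployment latency paid by this call)."""
        self.reap_if_idle(now)
        latency = 0.0
        if not self.deployed:
            # Cold start: the container is deployed on demand.
            self.deployed = True
            self.cold_starts += 1
            latency = self.deploy_seconds
        result = self.handler(request)  # no execution time limit is modelled
        self.last_used = now + latency
        return result, latency


svc = HybridService(handler=lambda req: {"doubled": req["x"] * 2}, idle_timeout=60.0)
print(svc.call({"x": 1}, now=0.0))    # first request pays the deployment cost
print(svc.call({"x": 2}, now=10.0))   # container is pre-loaded, no extra latency
print(svc.call({"x": 3}, now=500.0))  # idle for too long, so it is cold again
```

Setting `idle_timeout=None` in this sketch corresponds to the "always deployed" configuration mentioned in the last bullet: after the first request the container is never reaped.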

The event triggers are just a specific kind of action, running as services inside RYAX: you can bring your own event because you are the one coding it (pull, push, listen, ...).

The exchange of information and data between actions in a workflow is done through inputs and outputs. In RYAX, actions' inputs and outputs are managed for you, including files and directories, through a tight integration with an object store. This allows actions to remain stateless and spares end users the burden of implementing the object store integration themselves, which is typical when using services such as AWS Lambda.
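To make the idea of managed inputs and outputs more concrete, here is a minimal sketch of how a platform could chain stateless actions through an object store. It does not reflect the real RYAX API: `InMemoryObjectStore`, `run_workflow`, and the two example actions are hypothetical names, and a plain dictionary stands in for the object store.

```python
from typing import Callable, Dict, List

# An action is a plain function from named inputs to named outputs.
Action = Callable[[Dict[str, bytes]], Dict[str, bytes]]


class InMemoryObjectStore:
    """Stand-in for a real object store; blobs live in a dict (hypothetical)."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> str:
        self._blobs[key] = data
        return key

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def run_workflow(
    actions: List[Action], initial: Dict[str, bytes], store: InMemoryObjectStore
) -> Dict[str, bytes]:
    """Run actions in order; the 'platform' persists every output and feeds it forward."""
    refs = {name: store.put(f"step0/{name}", data) for name, data in initial.items()}
    for step, action in enumerate(actions, start=1):
        inputs = {name: store.get(key) for name, key in refs.items()}
        outputs = action(inputs)  # the action itself never touches the store
        refs = {name: store.put(f"step{step}/{name}", data) for name, data in outputs.items()}
    return {name: store.get(key) for name, key in refs.items()}


def to_upper(inputs: Dict[str, bytes]) -> Dict[str, bytes]:
    return {"text": inputs["text"].upper()}


def count_bytes(inputs: Dict[str, bytes]) -> Dict[str, bytes]:
    return {"length": str(len(inputs["text"])).encode()}


store = InMemoryObjectStore()
result = run_workflow([to_upper, count_bytes], {"text": b"hello ryax"}, store)
print(result)
```

The point of the design is that `to_upper` and `count_bytes` hold no state and contain no storage code: everything they need arrives as inputs, and everything they produce leaves as outputs.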

3. Remaining cloud agnostic

The RYAX platform has been developed to be cloud agnostic.

For this, we chose the container orchestration standard, Kubernetes, which is available from the major cloud providers (AWS EKS, Azure AKS, GCP GKE, ...) and can also be deployed on premises.

This allowed us to build our custom, workflow-based, "hybrid serverless" stack on top of each type of cloud using the same standard Kubernetes API.
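As an illustration of what "the same standard Kubernetes API" buys, the sketch below builds a plain `apps/v1` Deployment manifest as a Python dictionary. The assumption that a service maps to a Deployment, along with the service name and image URL, is ours for the sake of the example; the article does not describe how RYAX represents services internally.

```python
import json
from typing import Any, Dict


def deployment_manifest(name: str, image: str, replicas: int) -> Dict[str, Any]:
    """Build a standard apps/v1 Deployment; nothing in it is provider-specific."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # 0 models a service that is not deployed yet
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical service name and image, used only for illustration.
manifest = deployment_manifest(
    "resize-image", "registry.example.com/resize-image:1.0", replicas=0
)
print(json.dumps(manifest, indent=2))
```

Because the manifest only uses core Kubernetes fields, the same JSON can be submitted unchanged to a cluster on EKS, AKS, GKE, or on premises.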

Join our Discord to continue the discussion!